What Is an AI Chip? What are AI Chips Used for? Understanding Its Role in Modern Technology

I. Introduction

In the rapidly evolving world of technology, AI chips are at the forefront of innovation. Did you know that the AI chip market is projected to reach $83.25 billion by 2027? This statistic highlights the growing importance and impact of AI chips across industries. But what exactly is an AI chip? And what are AI chips used for?

An AI chip, also known as an artificial intelligence chip, is a specialized piece of hardware designed to accelerate AI applications, including machine learning, neural networks, and deep learning. These chips are engineered to handle complex computations much faster and more efficiently than traditional processors. In this article, we will delve into the intricacies of AI chips, exploring their definition, functionality, types, history, applications, advantages, challenges, future trends, and how to choose and purchase the right AI chip.

II. What is an AI Chip?

- Definition:

An AI chip is a specialized type of semiconductor designed specifically to handle artificial intelligence tasks. Unlike traditional central processing units (CPUs), which are general-purpose processors capable of handling a wide variety of computing tasks, AI chips are optimized for the unique demands of AI workloads. These workloads often involve processing large amounts of data and performing complex mathematical computations required for tasks such as machine learning, neural networks, and deep learning.

AI chips leverage advanced architectures to facilitate efficient parallel processing, which is essential for handling the simultaneous computations typical in AI applications. They are engineered to deliver high performance while maintaining energy efficiency, enabling faster processing times and lower power consumption than traditional processors.

- Functionality:

AI chips function by accelerating the computational processes essential for AI tasks. These chips use a variety of architectural innovations to optimize data throughput and computational efficiency. One key feature of AI chips is their ability to perform parallel processing, which allows multiple operations to be carried out concurrently. This capability is particularly important for deep learning tasks, where large neural networks must process vast amounts of data in real-time.
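As a rough illustration of why parallel processing suits these workloads, the sketch below computes the outputs of one small neural-network layer two ways: one row at a time, and with each row handed to a separate worker. The function names and the numbers are made up for this example. Python threads will not actually speed up this arithmetic; the point is only that each output depends on its own row of weights, so the rows can be processed independently, which is the property that parallel hardware exploits.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """Multiply-accumulate one row of weights against the input vector."""
    return sum(w * x for w, x in zip(row, vector))


def layer_sequential(weights, vector):
    """Compute the outputs one after another, as a single core would."""
    return [dot(row, vector) for row in weights]


def layer_parallel(weights, vector, workers=4):
    """Hand each independent row to a worker; map() keeps the row order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), weights))


# Illustrative values only: 4 outputs, 3 inputs.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, 1, 0]]
vector = [1, 0, 2]

sequential = layer_sequential(weights, vector)
parallel = layer_parallel(weights, vector)
print(sequential == parallel)  # both strategies produce the same outputs
```

Because no row needs the result of another row, the order of execution does not matter, and a chip with many processing units can work on many rows at once.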

AI chips also integrate high-bandwidth memory to ensure that data can be quickly accessed and processed. Specialized processing units within the chip, such as tensor cores or neural processing units (NPUs), are designed to handle specific types of calculations commonly used in AI models. These components work together to maximize the chip's efficiency and performance, making it possible to execute AI algorithms much faster than would be possible with a CPU or even a general-purpose graphics processing unit (GPU).
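To see why memory bandwidth matters alongside raw compute, here is a minimal back-of-the-envelope sketch. It assumes a simplified model in which a workload takes as long as the slower of two things: doing the arithmetic or moving the data. The peak-throughput and bandwidth figures are hypothetical placeholders, not specifications of any real chip.

```python
def compute_time_s(flops, peak_flops_per_s):
    """Time needed if arithmetic were the only cost."""
    return flops / peak_flops_per_s


def memory_time_s(bytes_moved, bandwidth_bytes_per_s):
    """Time needed if data movement were the only cost."""
    return bytes_moved / bandwidth_bytes_per_s


def bottleneck(flops, bytes_moved, peak_flops_per_s, bandwidth_bytes_per_s):
    """Report which resource limits the workload under this simple model."""
    t_compute = compute_time_s(flops, peak_flops_per_s)
    t_memory = memory_time_s(bytes_moved, bandwidth_bytes_per_s)
    return "memory" if t_memory > t_compute else "compute"


# Hypothetical chip: 100 TFLOP/s of peak compute, 1 TB/s of memory bandwidth.
PEAK = 100e12
BANDWIDTH = 1e12

# Workload A does a lot of arithmetic on little data.
print(bottleneck(2e12, 1e9, PEAK, BANDWIDTH))  # compute
# Workload B touches a lot of data but does little arithmetic on it.
print(bottleneck(2e9, 4e9, PEAK, BANDWIDTH))   # memory
```

Under this model, adding more processing units does nothing for workload B; only faster memory helps, which is one reason AI chips pair their compute units with high-bandwidth memory.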

- Types of AI Chips:

- Application-Specific Integrated Circuits (ASICs): ASICs are custom-designed chips tailored for specific AI tasks. Because they are purpose-built, ASICs offer high performance and efficiency for the tasks they are designed for. However, this specialization comes at the cost of flexibility, as ASICs cannot be repurposed for different tasks without significant redesign.

- Graphics Processing Units (GPUs): Originally developed for rendering graphics in video games, GPUs have become a popular choice for AI workloads due to their excellent parallel processing capabilities. GPUs are well-suited for training deep learning models, which require the simultaneous execution of numerous small calculations. Companies like NVIDIA have further optimized GPUs for AI by incorporating tensor cores, which are specifically designed for deep learning operations.

- Field-Programmable Gate Arrays (FPGAs): FPGAs are reconfigurable chips that can be programmed to perform specific tasks after manufacturing. This flexibility makes FPGAs a versatile option for AI applications, as they can be reprogrammed to optimize performance for different algorithms. While they may not match the efficiency of ASICs for dedicated tasks, their adaptability makes them valuable in a rapidly evolving field.

- Neural Processing Units (NPUs): NPUs are specialized processors designed to accelerate neural network computations. These units are optimized for the matrix multiplications and other operations that are fundamental to deep learning. NPUs provide high efficiency and performance, making them ideal for applications that rely heavily on neural networks.
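The matrix multiplication mentioned above can be sketched in a few lines. This is a plain, unoptimized illustration with made-up values, not how any particular chip implements it. It multiplies a small batch of inputs by a weight matrix and counts the multiply-accumulate (MAC) operations, the basic unit of work that this kind of hardware is built to perform in bulk.

```python
def matmul_with_mac_count(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n), counting MACs."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions of a and b must match")

    out = [[0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            acc = 0
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one multiply-accumulate
                macs += 1
            out[i][j] = acc
    return out, macs


# Illustrative values: 3 input samples with 2 features each,
# passed through a layer that maps 2 features to 4 outputs.
inputs = [[1, 2], [3, 4], [5, 6]]
layer_weights = [[1, 0, 2, 1], [0, 1, 1, 2]]

outputs, macs = matmul_with_mac_count(inputs, layer_weights)
print(outputs)  # [[1, 2, 4, 5], [3, 4, 10, 11], [5, 6, 16, 17]]
print(macs)     # 24, i.e. 3 * 2 * 4
```

The MAC count grows as the product of the three dimensions, so realistic layers with thousands of rows and columns need billions of these operations, which is why dedicated matrix hardware pays off.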

- Key Components:

AI chips are composed of several key components that work together to optimize performance for AI tasks. These components typically include multiple processing cores, each capable of handling a subset of the overall computation. High-bandwidth memory is integrated to facilitate rapid data transfer between the cores and the memory. Specialized processing units, such as tensor cores or NPUs, are included to handle the specific types of calculations required by AI models.

In addition to these hardware components, AI chips often feature sophisticated software support to optimize the execution of AI algorithms. This software can include drivers, libraries, and frameworks that enable developers to efficiently utilize the hardware for their specific AI applications. Together, these hardware and software components ensure that AI chips deliver the high performance and energy efficiency needed to handle the demanding workloads of modern AI applications.

III. History and Evolution of AI Chips

- Early Developments:

The journey of AI chips began in the late 20th century with the advent of the first general-purpose processors. However, these processors were not optimized for the intensive demands of AI applications. Early AI research relied heavily on software optimizations to squeeze performance out of general-purpose hardware.

- Technological Advancements:

Significant milestones include the development of GPUs for AI by companies like NVIDIA, which revolutionized the field by enabling faster and more efficient processing of AI algorithms. GPUs, with their parallel processing capabilities, allowed for significant advancements in deep learning. The introduction of ASICs and FPGAs further pushed the boundaries of what AI chips could achieve, offering dedicated solutions for specific AI tasks and applications.

- Current Trends:

Today, AI chips are continually evolving, with innovations such as tensor processing units (TPUs) by Google and neural engines by Apple. These advancements are driving the AI revolution across various sectors, from healthcare to autonomous driving. Companies are also exploring neuromorphic computing, which mimics the neural structure of the human brain, promising even greater efficiency and performance in the future.

IV. What Are AI Chips Used For?

- Consumer Electronics:

AI chips power smart devices like smartphones, smart home assistants, and wearables. These chips enable features like voice recognition, facial recognition, and personalized recommendations. For example, AI chips in smartphones enhance camera capabilities by enabling real-time scene recognition and optimization.

- Healthcare:

In the medical field, AI chips are used in diagnostic tools and medical imaging. They assist in early detection of diseases, personalized treatment plans, and efficient data analysis. AI chips enable real-time processing of medical images, leading to faster and more accurate diagnoses.

- Automotive Industry:

AI chips are crucial for autonomous vehicles and advanced driver-assistance systems (ADAS). They process data from sensors and cameras in real-time, ensuring safe and efficient navigation. AI chips enable features such as automatic emergency braking, lane-keeping assistance, and adaptive cruise control.

- Finance:

In finance, AI chips are used for fraud detection, algorithmic trading, and risk management. They analyze vast amounts of financial data quickly, helping firms make informed decisions. AI chips enable real-time monitoring of transactions to detect fraudulent activity and optimize trading strategies based on market trends.

- Others:

AI chips also find applications in robotics, data centers, telecommunications, and more. They are integral to the functioning of smart cities, industrial automation, and high-performance computing systems. In robotics, AI chips enable real-time processing of sensory data, allowing robots to interact intelligently with their environment.

V. Advantages of AI Chips

- Performance:

AI chips significantly enhance processing speed and efficiency, enabling faster execution of complex AI tasks. This is particularly important for applications that require real-time processing, such as autonomous driving and real-time language translation.

- Energy Efficiency:

Compared to traditional processors, AI chips typically use less energy to complete the same AI workload, making them well suited to energy-constrained applications like mobile and IoT devices. This efficiency is achieved through specialized architectures and optimized processing units.
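One way to make an efficiency comparison concrete is to look at energy per task rather than power draw alone. The sketch below multiplies power by the time a task takes. All figures are hypothetical placeholders chosen for easy arithmetic, not measurements of real hardware.

```python
def energy_per_inference_mj(power_w, latency_ms):
    """Energy for one inference in millijoules (watts x milliseconds)."""
    return power_w * latency_ms


def inferences_per_joule(power_w, latency_ms):
    """How many inferences one joule (1000 mJ) of energy pays for."""
    return 1000 / energy_per_inference_mj(power_w, latency_ms)


# Hypothetical general-purpose processor: lower power draw, slow on this task.
general_mj = energy_per_inference_mj(power_w=50, latency_ms=200)

# Hypothetical accelerator: higher power draw, finishes much sooner.
accelerator_mj = energy_per_inference_mj(power_w=100, latency_ms=10)

print(general_mj)      # 10000 mJ per inference
print(accelerator_mj)  # 1000 mJ per inference
```

In this made-up case the accelerator draws twice the power yet uses a tenth of the energy per inference, because it finishes twenty times sooner. That is why a chip can be energy efficient for its workload and still need serious cooling.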

- Scalability:

AI chips can handle large-scale AI tasks, making them suitable for applications requiring extensive data processing, such as big data analytics and cloud computing. They are designed to scale efficiently, allowing for the processing of increasingly large datasets.

- Customization:

Many AI chips are tailored for specific AI applications, providing optimized performance for particular tasks. This customization allows for enhanced performance in specific use cases, such as image recognition, natural language processing, and autonomous navigation.

VI. Challenges and Limitations

- Cost:

The development and production of AI chips are expensive, posing a challenge for widespread adoption. The high cost is due to the advanced technology and materials required, as well as the specialized manufacturing processes.

- Complexity:

Designing and programming AI chips require specialized knowledge and expertise, making the development process complex. This complexity can lead to longer development times and higher costs.

- Heat Management:

AI chips generate significant heat during operation, necessitating advanced cooling solutions to prevent overheating. Effective heat management is crucial to maintaining performance and preventing damage to the chip.

- Market Competition:

The AI chip market is highly competitive, with major players like NVIDIA, AMD, and Intel continually innovating and vying for market share. This competition drives rapid advancements but also creates challenges for new entrants to establish themselves.

VII. Future Trends in AI Chips

- Emerging Technologies:

Future advancements in AI chip technology include neuromorphic computing, quantum computing, and bio-inspired processors. These technologies promise to further enhance the capabilities and efficiency of AI chips. Neuromorphic computing, for example, aims to mimic the brain's neural architecture, enabling more efficient and powerful AI processing.

- Market Trends:

The AI chip market is expected to grow rapidly, driven by the continuous advancement of AI technologies and increasing demand for AI applications across industries. Market analysts predict significant growth in sectors such as healthcare, automotive, and consumer electronics.

- Research and Development:

Ongoing R&D efforts focus on improving the performance, energy efficiency, and versatility of AI chips. Companies are investing heavily in developing next-generation AI chips to stay ahead in the competitive market. Research is also exploring new materials and architectures to enhance the performance and efficiency of AI chips.

VIII. Choosing the Right AI Chip

- Factors to Consider:

When selecting an AI chip, consider factors such as performance, power consumption, cost, and the specific requirements of your application. Understanding the trade-offs between different types of AI chips is crucial for making an informed decision. For example, if energy efficiency is a priority, an NPU might be the best choice, while an ASIC might be better for high-performance tasks.

- Comparison:

Comparing different types of AI chips helps identify the best fit for various use cases. For instance, ASICs offer high performance for dedicated tasks, while FPGAs provide flexibility for multiple applications. GPUs, on the other hand, are widely used for training deep learning models due to their parallel processing capabilities.
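A simple way to structure this kind of comparison is a weighted score. The sketch below is only an illustration of the idea. The 1-to-5 scores are placeholder judgments loosely following the qualitative trade-offs described above, not benchmark results, and the criteria names and weights are assumptions you would replace with your own.

```python
def rank_chips(candidates, weights):
    """Return (name, score) pairs sorted from best to worst weighted score."""
    scored = []
    for name, scores in candidates.items():
        total = sum(weights[criterion] * scores[criterion] for criterion in weights)
        scored.append((name, total))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Placeholder scores on a 1-5 scale; replace with your own assessments.
candidates = {
    "ASIC": {"performance": 5, "flexibility": 1, "efficiency": 5},
    "GPU": {"performance": 4, "flexibility": 4, "efficiency": 2},
    "FPGA": {"performance": 3, "flexibility": 5, "efficiency": 3},
    "NPU": {"performance": 4, "flexibility": 2, "efficiency": 5},
}

# A fixed, dedicated workload: performance and efficiency matter most.
dedicated = rank_chips(candidates, {"performance": 5, "flexibility": 2, "efficiency": 3})

# A project whose algorithms are still changing: flexibility matters most.
evolving = rank_chips(candidates, {"performance": 2, "flexibility": 6, "efficiency": 2})

print(dedicated[0][0])  # ASIC
print(evolving[0][0])   # FPGA
```

The ranking flips when the weights change, which is the practical takeaway: the best chip depends on the priorities of the application rather than on any single headline number.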

IX. AI Chip Makers and How to Buy

- Top AI Chip Makers:

Leading AI chip manufacturers include NVIDIA, AMD, IBM, Intel, and Google. These companies offer a range of AI chips designed for various applications, from consumer electronics to enterprise solutions. NVIDIA, for example, is known for its GPUs, while Google has developed TPUs specifically for its AI workloads.

- How to Buy AI Chips?

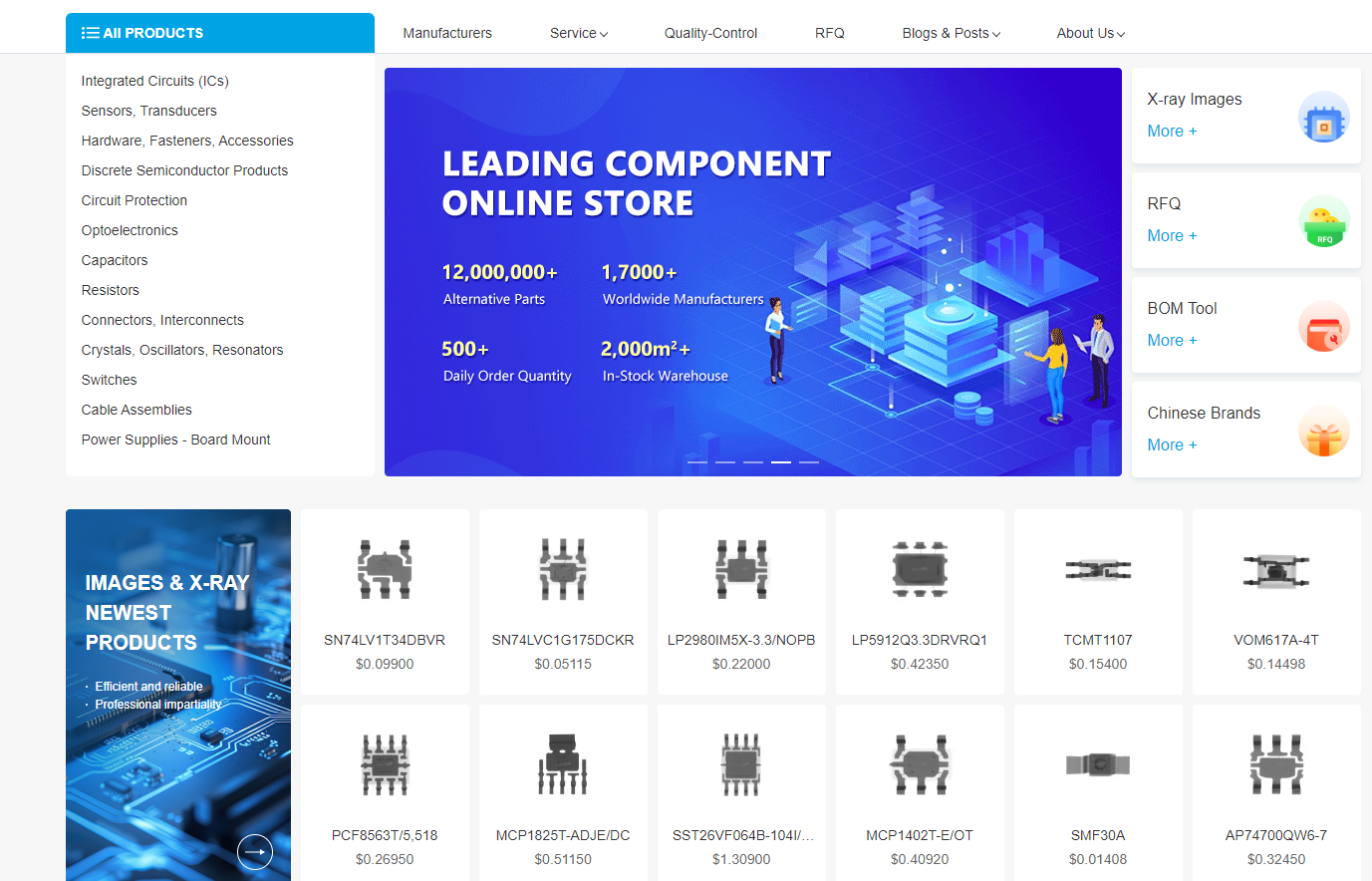

AI chips can be purchased from specialized electronic component distributors. One such distributor is Chipsmall, where you can explore and buy a wide range of AI chips and related components. When purchasing AI chips, it's important to consider factors such as compatibility with your existing hardware and software, as well as the specific requirements of your AI applications.

X. Conclusion

- Summary:

This article has explained what an AI chip is, what AI chips are used for, and how to choose one. AI chips are transforming the technology landscape with their enhanced processing capabilities, energy efficiency, and scalability. They play a pivotal role in various industries, from healthcare and automotive to finance and consumer electronics. AI chips enable the efficient processing of complex AI tasks, making them essential for the advancement of AI technologies.

- Future Outlook:

The future of AI chips looks promising, with continuous advancements and innovations on the horizon. As AI technology evolves, AI chips will become even more integral to our daily lives, driving progress and enabling new possibilities. Future trends such as neuromorphic computing and quantum computing promise to further enhance the capabilities of AI chips.
